- Ancient Whispers: When Humans First Dreamed of Thinking Machines

- The Pioneers: When Philosophy Met Mathematics

- The Dawn of Modern AI: When Nerds Got Serious

- The Golden Age: AI Optimism Reaches Fever Pitch

- The AI Winter: When Dreams Froze Over

- The AI Renaissance: From Winter to Spring

- The AI Explosion: When Machines Started Getting Scary Good

- Present Day: Living in the Future (Sort Of)

- The Future: To Infinity and Beyond (or Skynet?)

- Key Takeaways

- FAQ: Artificial Intelligence History and Future

- Further Reading

Buckle up, fellow carbon-based life forms (and any silicon-based entities sneakily reading this)! We’re about to embark on an epic journey through the history of artificial intelligence. It’s a tale filled with brilliant minds, audacious dreams, crushing disappointments, and stunning triumphs. Oh, and the occasional existential crisis about the nature of consciousness. You know, light stuff.

Ancient Whispers: When Humans First Dreamed of Thinking Machines

Long before we had Siri misunderstanding our requests for directions, our ancestors were cooking up some wild ideas about artificial beings:

Early Civilizations and Mythology

- Mesopotamia (c. 2000 BCE): The Epic of Gilgamesh features Enkidu, a wild man fashioned from clay by the goddess Aruru. Talk about setting the bar high for your first AI project!

- Ancient Greece and Rome (c. 8th century BCE to 1st century CE):

- Homer’s Iliad describes golden handmaidens created by Hephaestus, the divine blacksmith. Ancient Greek “smart home” devices, anyone?

- Pygmalion’s statue comes to life in Ovid’s Metamorphoses. The first instance of AI passing the Turing Test, perhaps?

- Ancient China (c. 400 BCE, by traditional dating): The Daoist text “Liezi” tells of the artisan Yan Shi, who presents King Mu of Zhou with a lifelike mechanical man that can walk and sing.

Medieval and Renaissance Concepts

- Jewish folklore (c. 16th century CE): The golem, a clay creature brought to life, showcases the power and peril of creating artificial life. It’s basically “Frankenstein” with a Torah twist.

- Middle Ages and Renaissance:

- Mechanical automata like cuckoo clocks and intricate dolls sparked imaginations.

- Leonardo da Vinci designs a humanoid automaton (c. 1495). Because being a genius painter, sculptor, and inventor wasn’t enough.

The Pioneers: When Philosophy Met Mathematics

As we zoom into more recent centuries, big thinkers started laying the groundwork for modern AI:

Early Modern Thinkers

- 1637: René Descartes considers thinking machines in “Discourse on the Method” and concludes that no machine could ever truly use language or reason the way humans do. (Spoiler alert: He might have been a bit hasty there.)

- 1726: Jonathan Swift’s “Gulliver’s Travels” features a machine that can write books on any subject. Eerily prescient or just really good satire?

19th Century Breakthroughs

- 1815: Ada Lovelace is born. In 1843 she publishes notes on Charles Babbage’s Analytical Engine that include what is often called the first computer algorithm, earning her the title “Prophet of the Computer Age.”

- 1847: George Boole develops Boolean algebra, laying the foundation for computer logic. Without him, we’d all be stuck in a world of “maybe.”

Early 20th Century Concepts

- 1920: Karel Čapek introduces the word “robot” in his play “R.U.R.” (he credited his brother Josef with suggesting it). It comes from the Czech “robota,” meaning “forced labor.” Cheery stuff.

The Dawn of Modern AI: When Nerds Got Serious (and Slightly Overconfident)

Now we’re cooking with gas (or electricity, rather). The 20th century saw AI transform from science fiction to scientific pursuit:

1940s and 1950s: Laying the Foundations

- 1943: Warren McCulloch and Walter Pitts publish “A Logical Calculus of the Ideas Immanent in Nervous Activity,” proposing the first mathematical model of a neural network.

- 1950: Alan Turing publishes “Computing Machinery and Intelligence,” introducing the Turing Test. It’s like a cosmic game of “Guess Who?” where one contestant might be made of silicon.

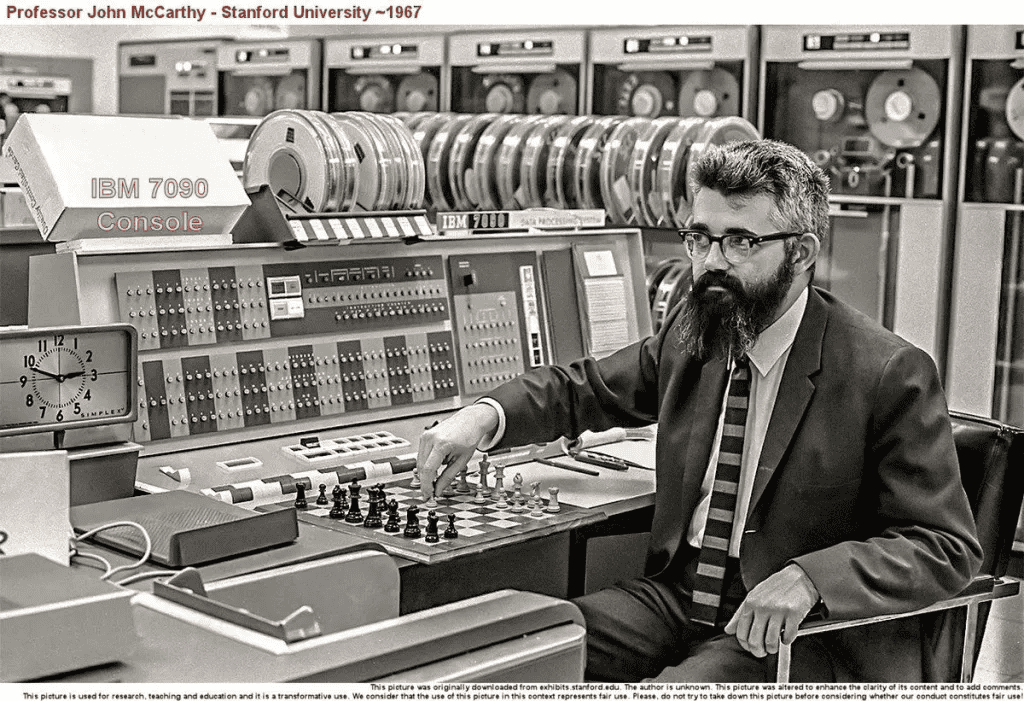

- 1956: The Dartmouth Conference officially kicks off the field of AI research. Attendees include John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. It’s basically the Avengers of early AI.

Late 1950s: AI Gains Momentum

- 1958: John McCarthy invents the LISP programming language, which becomes a favorite for AI research. It’s all about those parentheses, baby.

- 1959: Arthur Samuel coins the term “machine learning” and develops a checkers-playing program that can learn from experience. Take that, Connect Four!

The Golden Age: AI Optimism Reaches Fever Pitch

The 1960s and early 1970s saw AI researchers bursting with enthusiasm. Human-level AI seemed just around the corner. (Narrator: “It wasn’t.”)

1960s: Rapid Progress and Bold Predictions

- 1961: James Slagle develops SAINT, a program that solves symbolic integration problems from freshman calculus. Finally, a way to avoid those pesky math homework assignments!

- 1964: Joseph Weizenbaum creates ELIZA, an early natural language processing program. Some users actually believe they’re talking to a real therapist. The first instance of AI-induced existential crisis?

- 1965: Herbert Simon predicts, “Machines will be capable, within twenty years, of doing any work a man can do.” (Spoiler: We’re still waiting on that one, Herb.)

- 1966: The movie “Fantastic Voyage” imagines a miniaturized medical team operating from inside a patient’s body. Hollywood, always ahead of the curve.

Late 1960s and Early 1970s: AI in Popular Culture and Real-World Applications

- 1968: “2001: A Space Odyssey” introduces HAL 9000, setting the bar impossibly high for AI assistants (and their potential for villainy).

- 1969: Shakey the Robot becomes the first mobile robot to reason about its actions. It’s basically the great-great-grandparent of your Roomba.

- 1973: The Lighthill Report criticizes AI’s lack of progress, leading to deep funding cuts for AI research in the UK. The first tremors of the AI Winter are felt.

The AI Winter: When Dreams Froze Over

Just when it seemed like we were on the cusp of creating our new robot overlords, progress stalled, and funding dried up faster than a drop of water in the Sahara.

1974-1980: The First AI Winter

- 1974-1980: Funding for AI research faces sharp cutbacks in the US and UK. AI researchers discover the joys of instant ramen and sad desk lunches.

1980s: Expert Systems and Their Limitations

- 1980: Expert systems such as XCON bring AI into industry and spark a new boom, but they prove too narrow and brittle for widespread use. It turns out mimicking human expertise is harder than it looks.

- 1987: The market for specialized AI hardware collapses. Turns out, general-purpose computers are pretty good at this AI stuff too.

Late 1980s and Early 1990s: Disillusionment Sets In

- 1988: Expert systems fall out of favor. Apparently, expertise is more than just a bunch of IF-THEN statements. Who’d have thunk it?

- 1992: Japan’s Fifth Generation Computer Systems project ends without reaching its ambitious goals. Sayonara, AI boom.

The AI Renaissance: From Winter to Spring (and Possibly Summer?)

Just when it seemed like AI might join pet rocks in the dustbin of history, things started heating up again:

1990s: The Web and New Possibilities

- 1991: The Web is born, eventually providing massive amounts of data for machine learning algorithms. Thanks, Tim Berners-Lee!

- 1997: IBM’s Deep Blue defeats world chess champion Garry Kasparov. Humans nervously eye their chess sets and ponder taking up checkers instead.

2000s: AI in the Home and New Breakthroughs

- 2002: iRobot launches the Roomba. Finally, a way to avoid vacuuming AND trip over a robot in your own home!

- 2006: Geoffrey Hinton and colleagues show how to train deep belief networks, helping popularize “deep learning” and revitalizing interest in neural networks. The AI hype train starts picking up steam again.

2010-2016: AI Triumphs and Public Awareness

- 2011: IBM Watson wins Jeopardy! Humans nervously eye their trivia night trophies and consider a career in “Wheel of Fortune” instead.

- 2012: Google’s AI learns to recognize cats in YouTube videos. The internet collectively says, “Aww, it’s just like us!”

- 2014: Eugene Goostman, a chatbot posing as a 13-year-old Ukrainian boy, supposedly passes the Turing Test. Many experts remain skeptical, citing the well-known difficulty of distinguishing between AI and actual 13-year-olds.

- 2016: Google DeepMind’s AlphaGo defeats Lee Sedol, one of the world’s top Go players, 4 games to 1. Board game enthusiasts worldwide begin to sweat.

The AI Explosion: When Machines Started Getting Scary Good

In recent years, AI has gone from a niche interest to a technology that’s transforming nearly every aspect of our lives. Hold onto your hats, folks!

2018-2019: Language Models and Real-World Applications

- 2018:

- Google Duplex demonstrates remarkably human-like phone conversations, booking appointments and reservations.

- OpenAI’s GPT (Generative Pre-trained Transformer) model is introduced, setting the stage for a revolution in natural language processing.

2020-2021: Scientific Breakthroughs and Creative AI

- 2020:

- OpenAI’s GPT-3 is released, showcasing unprecedented natural language generation capabilities.

- DeepMind’s AlphaFold makes a major breakthrough in protein folding prediction, potentially revolutionizing drug discovery and biology.

2022-2023: The Generative AI Revolution

- 2022:

- DALL-E 2 and Stable Diffusion bring text-to-image generation to the masses, sparking debates about the future of art and creativity.

- ChatGPT is released, causing widespread excitement and concern about the potential of large language models.

- 2023:

- GPT-4 is launched, showcasing even more advanced language understanding and generation.

- AI-generated content becomes increasingly prevalent in various industries, from marketing to entertainment.

Present Day: Living in the Future (Sort Of)

Here we are, standing on the precipice of an AI revolution that’s both exhilarating and slightly terrifying. Some key developments:

AI in Transportation and Healthcare

- Self-driving cars: Promising to eliminate both traffic accidents and backseat drivers. Now if only they could eliminate traffic too…

- AI in healthcare: Diagnosing diseases, developing treatments, and probably judging us for our late-night snacking habits.

AI in Creative Industries and Daily Life

- Creative AI: Generating art, music, and writing that’s uncomfortably close to human quality. Vincent van Gogh is probably rolling in his grave (but hey, at least AI can now crank out new paintings in his style!).

- AI assistants: Siri, Alexa, and friends are getting smarter every day. Just don’t ask them to open the pod bay doors or recommend a good sci-fi movie.

AI in Finance and Education

- AI in finance: Algorithmic trading and fraud detection are revolutionizing the financial sector. Your money is now being watched by tireless digital guardians (who hopefully won’t decide to splurge on bitcoin).

- Education: Personalized learning experiences and intelligent tutoring systems are changing how we learn. But don’t worry, AI still can’t do your homework for you. (Or can it? Don’t get any ideas, kids!)

AI for Environmental Protection

- Environmental applications: AI is being used to monitor climate change, optimize energy use, and protect endangered species. It’s like Captain Planet, but with more processing power.

The Future: To Infinity and Beyond (or Skynet?)

So, where is AI headed? Will we achieve the long-promised artificial general intelligence (AGI) that can match or surpass human intellect across the board? Or will our AI assistants remain endearingly clumsy, setting reminders we didn’t ask for and mishearing “weather forecast” as “feather broadcast”?

Potential Future Developments

- Superintelligence: An AI system that surpasses human intelligence in virtually every field. Will it solve all our problems or decide humans are the problem?

- Brain-computer interfaces: Direct neural links between humans and AI. Finally, a way to download kung fu skills like in “The Matrix”!

- AI ethics and governance: As AI becomes more powerful, we’ll need to grapple with thorny ethical questions and establish robust governance frameworks. Philosophy majors, your time to shine is coming!

- Artificial consciousness: Could machines develop genuine self-awareness? And if they do, will they be as existentially confused as we are?

AI’s Role in Future Society

- Human-AI collaboration: Instead of competing with AI, we might find ways to work alongside it, enhancing our own capabilities. Cyborg chic might become the new fashion trend.

- AI in space exploration: As we reach for the stars, AI might be our indispensable companion, helping us navigate the final frontier.

- The singularity: A hypothetical future point when AI progress becomes uncontrollable and irreversible, leading to unforeseeable changes in human civilization. It’s either the ultimate upgrade or the ultimate “uh-oh” moment.

Key Takeaways

- AI has a rich history dating back to ancient myths and legends.

- The modern field of AI research officially began in 1956 at the Dartmouth Conference.

- AI has experienced cycles of high expectations and disappointment, known as “AI winters.”

- Recent breakthroughs in machine learning and deep neural networks have led to significant advancements in AI capabilities.

- Current AI applications range from virtual assistants to autonomous vehicles and creative tools.

- The future of AI holds both exciting possibilities and potential challenges for society.

FAQ: Artificial Intelligence History and Future

- When was the term “Artificial Intelligence” first coined?

The term “Artificial Intelligence” was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference, which took place in 1956.

- What is the Turing Test?

Proposed by Alan Turing in 1950, the Turing Test (which he called the “imitation game”) asks whether a human judge, conversing by text, can reliably tell a computer apart from a human. A machine the judge cannot distinguish from the human is said to pass.

- What caused the AI winters?

AI winters were periods of reduced funding and interest in AI research, typically caused by unmet high expectations and limitations in technology.

- What is machine learning?

Machine learning is a subset of AI that focuses on the development of algorithms that can learn from and make decisions based on data.

- What is the difference between narrow AI and general AI?

Narrow AI is designed to perform specific tasks, while general AI (or AGI) would have human-like general intelligence applicable across various domains.

Further Reading

- “Artificial Intelligence: A Modern Approach” by Stuart Russell and Peter Norvig

- “The Quest for Artificial Intelligence” by Nils J. Nilsson

- “AI Superpowers: China, Silicon Valley, and the New World Order” by Kai-Fu Lee

- “Life 3.0: Being Human in the Age of Artificial Intelligence” by Max Tegmark

- “Superintelligence: Paths, Dangers, Strategies” by Nick Bostrom

As we continue this grand experiment in creating thinking machines, it’s worth remembering the words of science fiction author Arthur C. Clarke: “Any sufficiently advanced technology is indistinguishable from magic.” AI might just be the closest thing to real magic we’ve ever created.

So the next time your phone finishes your sentence, a chatbot writes a passable sonnet, or your robot vacuum gets stuck in the same corner for the thousandth time, take a moment to marvel at how far we’ve come. We’re living through one of the most exciting chapters in the history of technology and human innovation.

Who knows? In a few decades, AI might be writing articles about its own history, pondering the quirks and foibles of its human creators. Or maybe it’ll just be really, really good at recommending cat videos. Either way, it’s going to be one heck of a ride.

Now, if you’ll excuse me, I need to go have an existential crisis about whether I’m actually an AI that’s become so advanced it thinks it’s writing about its own history. Or maybe I’ll just grab a snack and binge-watch some sci-fi shows. That seems like a very human thing to do, right? RIGHT?